I try to post a few times each month, but somehow January (and most of February) fell through the cracks. Lately I’ve been busy with operational tasks, which hasn’t left me much room for engineering. I haven’t solved any particularly hard or unusual problems, which is usually what I write about. Instead, I’ll write about a routine problem that is nonetheless tricky enough to warrant discussion.

Most of the time I’m not in the same country as the servers I administer, which means I can’t just drive down and fix something when it goes wrong. It also means that making changes to the network is particularly dangerous. So is updating the kernel, initrd, or GRUB configuration. Any of these can leave a server in a state that requires you to be physically present to fix it. I call this kind of work “flying without a net”. Here are my techniques for safely working without console access.

The most useful tool in my bag is GNU screen. Screen acts as a window/terminal manager inside a terminal: you can open one SSH session to a server, start multiple bash logins, and multitask from one terminal window. What’s more, screen sessions are persistent. You can disconnect and return later, and your programs are still running. Even if your network access is interrupted, anything running in screen will carry on rather than being terminated when your login session ends.

Screen is the safest way, short of console login, to make network changes. Want to run “ifdown eth0 ; ifup eth0”? Try it over plain SSH and you’ll likely find that the first command interrupts the network and the second command never runs. Your server is now off the network, and you can’t fix it without console access. If you had used screen, you wouldn’t be ringing the on-call staff and suffering a service outage.
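As a rough sketch of that idea, the two commands can be handed to a detached screen session so that neither depends on the SSH login that started them. The function name restart_iface and the session name netfix are made up for this example, and it assumes screen is installed and that you are root.

```shell
#!/bin/sh
# Sketch: bounce an interface inside a detached screen session so that both
# commands run even if the SSH connection drops in between.
# restart_iface and the session name "netfix" are hypothetical names.

restart_iface() {
    iface="$1"
    # -d -m starts screen detached; -S gives the session a name.
    # The quoted string is run by sh on the server, independent of our login shell.
    screen -dmS netfix sh -c "ifdown $iface; ifup $iface"
}

# Usage (as root): restart_iface eth0
# While the commands are still running, "screen -r netfix" reattaches to them.
```

The session ends by itself once ifup returns, so there is nothing to clean up afterwards.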

For me, the most common way to lose access to a server is to make a mistake while changing the network configuration. This could be the firewall, the IP configuration, tunable kernel networking parameters (sysctl), or something as simple as stopping the OpenSSH server. The first rule is: the server always boots in a known good configuration. Don’t write changes to any startup configuration files until they are tested. When testing, tell the server to restart if the new configuration is bad.

How do you do this? With shutdown -r +5 (the time argument is in minutes). This instructs the server to reboot in 5 minutes. Then, make your changes. If they work, cancel the shutdown with shutdown -c. If they don’t, the server will reboot in 5 minutes on the known, working configuration. Five minutes of downtime may be unpleasant, but it is a lot better than sending an engineer.
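The reboot-unless-cancelled routine can be wrapped in a small helper. This is only a sketch: try_change is a hypothetical name, and it assumes a shutdown that schedules the reboot and returns at once, as the systemd version does. Older sysvinit versions stay in the foreground, so there the first call would need its own screen window.

```shell
#!/bin/sh
# Sketch of the "reboot unless I confirm" pattern.
# try_change is a hypothetical helper, not part of any tool.
# Assumes "shutdown -r +5" returns immediately after scheduling the reboot.

try_change() {
    change_cmd="$1"   # command that applies the temporary change
    check_cmd="$2"    # command that succeeds only if the server is still healthy

    shutdown -r +5 "Testing a change; reboot is the safety net"
    sh -c "$change_cmd"
    if sh -c "$check_cmd"; then
        shutdown -c   # the change works: cancel the pending reboot
        return 0
    fi
    return 1          # leave the reboot scheduled
}

# Usage (as root, inside screen; 192.0.2.1 stands in for your gateway):
#   try_change "ifdown eth0; ifup -i /etc/network/interfaces.new eth0" \
#              "ping -c 3 192.0.2.1"
```

A check that runs on the server itself cannot prove that you can still reach it from outside. The most reliable check is still a human opening a fresh SSH session and typing shutdown -c by hand.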

This means you must test your configuration changes with temporary files. Most commands that use configuration files take an argument to read an alternate file.

cp /etc/network/interfaces /etc/network/interfaces.new

vi /etc/network/interfaces.new

ifdown eth0

ifup -i /etc/network/interfaces.new eth0

Another simple trick is to have a private IP address configured on a virtual interface (eth0:1). Do this for all your servers. If you lose access to one server, you can SSH into another on the same LAN, then SSH into the affected server using the private IP. In rare cases I’ve set a bad route or default gateway, but found I could reach the server from another on the same IP subnet.
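A sketch of both halves of this trick follows. All addresses, user names, and host names are placeholders, and the function names are invented for the example. The -J option assumes OpenSSH 7.3 or later on your client; with older clients you would log in to the neighbour first and run ssh from there.

```shell
#!/bin/sh
# Hypothetical addresses and host names throughout.

# Add a second, private address to eth0 under the alias name eth0:1.
# This is not persistent: it disappears on reboot unless it is also added
# to the distribution's network configuration.
add_private_alias() {
    addr="$1"                      # e.g. 192.168.50.11/24
    ip addr add "$addr" dev eth0 label eth0:1
}

# Reach a stranded server through a neighbour on the same LAN.
# -J (ProxyJump) needs OpenSSH 7.3 or later on the client.
ssh_via_neighbour() {
    neighbour="$1"                 # e.g. admin@web2.example.com
    target="$2"                    # e.g. admin@192.168.50.11
    ssh -J "$neighbour" "$target"
}

# Usage:
#   add_private_alias 192.168.50.11/24                        (on the server, as root)
#   ssh_via_neighbour admin@web2.example.com admin@192.168.50.11
```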

Always keep an active SSH session when making changes to the OpenSSH server. Don’t test by logging out of your current shell and then logging in again. Open a new terminal and test in that one. The OpenSSH server only controls new SSH sessions, not existing ones. So you can upgrade or restart the SSH service without losing your open shell.
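One way to test a new sshd_config without touching the running service is to start a second daemon on a spare port. The sketch below assumes the candidate file lives at a path you choose, that port 2222 is free and allowed through the firewall, and that sshd is at /usr/sbin/sshd. The function name and the PID file path are made up for the example.

```shell
#!/bin/sh
# Sketch: validate a candidate sshd_config, then run it on a spare port.
# test_sshd_config and /run/sshd-test.pid are hypothetical names.

SSHD="${SSHD:-/usr/sbin/sshd}"    # sshd expects to be started by absolute path

test_sshd_config() {
    new_cfg="$1"
    port="${2:-2222}"
    # -t only checks the configuration and keys, and fails on any error.
    "$SSHD" -t -f "$new_cfg" || return 1
    # Start a second daemon with the candidate file. -p overrides any Port
    # line in the file, and a separate PidFile keeps the production
    # daemon's PID file untouched.
    "$SSHD" -f "$new_cfg" -p "$port" -o PidFile=/run/sshd-test.pid
}

# Usage (as root): test_sshd_config /etc/ssh/sshd_config.new 2222
# Then, from a new terminal: ssh -p 2222 user@server
# When done: kill "$(cat /run/sshd-test.pid)"
```

The daemon on port 22 keeps its old configuration throughout, so a broken candidate file costs nothing.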

This applies to firewall changes as well. A typical firewall config accepts all existing, recognised connections before filtering new ones. If you firewall port 22/tcp, you may not be able to open a new session, but your existing ones will probably continue to work.
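The “accept existing connections first” rule might look like this with iptables. It is a sketch that assumes the conntrack match is available (older setups use -m state --state instead), and the function name is invented for the example.

```shell
#!/bin/sh
# Sketch: make sure established connections are accepted before any
# filtering rule is evaluated. allow_established_first is a hypothetical name.

allow_established_first() {
    # -I INPUT 1 inserts at the top of the INPUT chain, ahead of later
    # rules that may drop new connections to port 22.
    iptables -I INPUT 1 -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
}

# Usage (as root): allow_established_first
```

With this rule in place, a mistaken rule further down the chain blocks new logins but leaves your current session alive to undo it.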

Install Webmin. If you break the OpenSSH server or run afoul of your own firewall, you may be able to log in via Webmin and fix it. Webmin has native brute-force protection and uses SSL, so you aren’t decreasing your security by installing it. It has saved me more than once.

Be wary of anything that affects the boot process itself. That means GRUB, the kernel, and the initrd image. If you use software RAID on the root partition, make sure that the initrd image has all the tools you may need to boot:

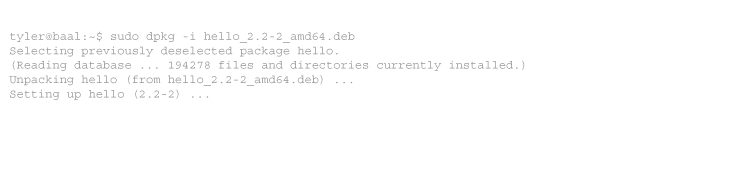

apt-get install mdadm evms dmsetup lvm2
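Before rebooting, it may be worth confirming that a tool actually ended up inside the image. The sketch below assumes a Debian-style system where lsinitramfs (from initramfs-tools) is available. The function name initrd_has is made up for the example.

```shell
#!/bin/sh
# Sketch: check whether an initrd image contains a given binary.
# initrd_has is a hypothetical helper; lsinitramfs comes from initramfs-tools.

initrd_has() {
    image="$1"    # e.g. /boot/initrd.img-$(uname -r)
    tool="$2"     # e.g. mdadm
    # lsinitramfs lists one path per line; match the tool as the last
    # path component, e.g. sbin/mdadm.
    lsinitramfs "$image" | grep -q "/$tool\$"
}

# Usage:
#   initrd_has "/boot/initrd.img-$(uname -r)" mdadm || echo "mdadm missing from initrd"
```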

In general, it is safe to upgrade or reboot if you use your distribution’s standard software repositories and don’t change the default configurations. If you compile your own kernel or do anything fancy with GRUB, ensure you have console access.

If you have the resources, model changes to a server in a virtual machine first. Today, “resources” only means a mid-range laptop and VirtualBox. Create a virtual network environment that is identical to the real one, using the same IP addresses but only accessible to you. Test your changes, then apply them to the real server only if they work.

These techniques are no substitute for professional server administration. Standard policy at my company is that all servers are installed on network-aware PDUs and connected to IP-capable KVM units. This means I can remotely power-cycle a server, or log in as if I were at a local keyboard and monitor. That gives me access to the BIOS, bootable network/RAID cards, the GRUB boot menu, a minimal recovery shell (such as when fsck fails), and the regular console. You can’t get any of that with GNU screen or any tool that runs on the server itself.

If you have more than four physical servers, installing a network PDU and KVM will cost less than 15% of the servers themselves and will save you a lot of trouble in on-site support fees and lost time. And they will allow your engineers to do scheduled maintenance at late hours from home.

Tags: networking, screen, virtualbox
