I recently discussed how to use LVM to make a live copy of a BackupPC pool. That guide covers how to set up LVM on a new server with no data. But what if you already have a working BackupPC install, and you want to move your existing pool to LVM?

I wrote a post 18 months ago on moving a Linux root partition to software RAID. Moving to LVM shares a lot of the same problems. The differences are:

- We’re not touching the root (or /boot) filesystem. Therefore we don’t have to deal with BIOS or GRUB. In fact, this entire process can be done without rebooting.

- We must copy the filesystem bit-for-bit instead of using file-aware tools like rsync, cp, or tar. The BackupPC storage pool relies on huge numbers of hard links, which file-aware tools copy very slowly and with large memory requirements.

If you have another disk to use as temporary storage, this problem is easy to solve. Simply unmount your BackupPC partition and use dd to clone the data to the spare drive. Then create a logical volume on the old partition and copy the data back.

If you don’t have another disk, you must convert the disk layout in place. The instructions in this post can be used to move any filesystem that resize2fs can shrink to LVM; just disregard the parts specific to BackupPC. I assume that your server’s drive and filesystem layout is as described in my last post:

- /dev/sda provides the root filesystem (and possibly others). We’re not concerned about it here.

- /dev/sdb is a RAID array with one partition. It does not yet use LVM, and contains an ext3 filesystem mounted on /var/lib/backuppc.

The general plan is:

- Reduce filesystem usage on /var/lib/backuppc to 49% or less

- Resize the filesystem and partition to 49%

- Create a second partition and logical volume with the free space

- Copy the filesystem to the logical volume

- Add the first partition to the volume group

- Expand the logical volume and filesystem

- Mount the logical volume

Warning: this is a multi-day process with many long delays for large data transfers. I’ll identify the delays as we go along. Never interrupt a filesystem operation unless you know exactly what you are doing. I recommend executing all long-running processes in a GNU screen session in case your network connection is interrupted.

Step 1: Reduce filesystem usage on /var/lib/backuppc to 49% or less

If your BackupPC pool filesystem is more than 49% full, you must reduce disk usage.

- Remove old backups. Reduce the BackupPC variables $Conf{FullKeepCnt} and $Conf{IncrKeepCnt} to keep fewer backups. Alternatively, use a script like BackupPC_deleteBackup.sh to delete old ones manually.

- Set $Conf{BackupPCNightlyPeriod} = 1, so that each BackupPC_Nightly run processes the entire pool.

- Wait for the next BackupPC_Nightly run. BackupPC will purge old backups and clean the pool of unnecessary files. This may take several days, but during this time you can perform backups as normal.

Do not proceed until filesystem usage is below 49%. Verify this with df.
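If you would rather script the check, here is a minimal sketch. The helper names df_use_pct and usage_ok are hypothetical, made up for this example. It reads the "Capacity" column of POSIX-format df output. df computes that percentage against the space available to ordinary users, so it slightly overstates usage, which errs on the safe side here.

```shell
# Hypothetical helper: read `df -P` output on stdin and print the
# "Capacity" column (field 5) of the first data line, without the "%".
df_use_pct() {
    awk 'NR == 2 { sub(/%$/, "", $5); print $5 }'
}

# Hypothetical helper: succeed if percentage $1 is at or below
# limit $2 (default 49).
usage_ok() {
    [ "$1" -le "${2:-49}" ]
}
```

For example: `pct=$(df -P /var/lib/backuppc | df_use_pct); usage_ok "$pct" && echo "OK to continue" || echo "Still ${pct}% full"`.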

Step 2: Resize the filesystem and partition to 49%

You may wish to read this guide first. It is possible to use the parted “resize” function for this, but it doesn’t work for all filesystems. We’ll do it manually.

- Stop BackupPC. From this point no new backups will be kept until the entire process is finished, which may be several days.

/etc/init.d/backuppc stop

- Unmount the partition.

umount /var/lib/backuppc

- Get the block size and block count for the partition, and calculate the new size in K.

tune2fs -l /dev/sdb1 | grep Block
Block count:              2620595
Block size:               4096

I’ve used a 10 GB partition in this example, and we now know that this filesystem consists of 2620595 blocks of 4K size. Calculate the new size as follows:

new size = (current size in blocks) * (block size in K) * 0.49

Round this number down. Our new size will be 2620595 * 4 * 0.49 = 5136366.2, which rounds down to 5136366K.
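The calculation above can be scripted from the tune2fs output. This is a sketch: new_size_k is a hypothetical helper, and it assumes the "Block count:" and "Block size:" lines look like the ones shown here. It uses `local`, so it assumes bash or a shell such as dash that supports it.

```shell
# Hypothetical helper: read `tune2fs -l` output on stdin and print
# (block count) * (block size in K) * pct / 100, rounded down.
# Exits non-zero if either line is missing.
new_size_k() {
    local pct=${1:-49}
    awk -v pct="$pct" '
        /^Block count:/ { count = $3 }
        /^Block size:/  { size = $3 }
        END {
            if (count == "" || size == "") exit 1
            printf "%d\n", int(count * (size / 1024) * pct / 100)
        }'
}
```

For example: `resize2fs /dev/sdb1 "$(tune2fs -l /dev/sdb1 | new_size_k 49)K"`. With the numbers above it prints 5136366, which is 1284091 blocks of 4K, matching the resize2fs output below.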

- Check the filesystem. This may take several hours or days.

fsck -f /dev/sdb1

- Resize the filesystem.

resize2fs /dev/sdb1 5136366K
resize2fs 1.40.8 (13-Mar-2008)
Resizing the filesystem on /dev/sdb1 to 1284091 (4k) blocks.
The filesystem on /dev/sdb1 is now 1284091 blocks long.

- Resize the partition by deleting it and creating a new one of 5136366K. The new partition must start at the same cylinder as the old one (here, the default of 1).

fdisk /dev/sdb
Command (m for help): p
Disk /dev/sdb: 10.7 GB, 10737418240 bytes
255 heads, 63 sectors/track, 1305 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk identifier: 0x0005eeec
   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1        1305    10482381   83  Linux
Command (m for help): d
Selected partition 1
Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4): 1
First cylinder (1-1305, default 1):
Using default value 1
Last cylinder or +size or +sizeM or +sizeK (1-1305, default 1305): +5136366K
Command (m for help): p
Disk /dev/sdb: 10.7 GB, 10737418240 bytes
255 heads, 63 sectors/track, 1305 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk identifier: 0x0005eeec
   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1         640     5140768+  83  Linux
Command (m for help): w
The partition table has been altered!
Calling ioctl() to re-read partition table.
Syncing disks.

Step 3: Create a second partition and logical volume with the free space

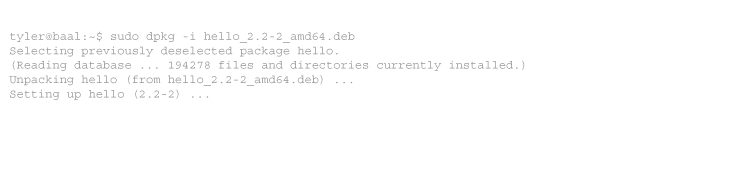

- If you haven’t already, install LVM.

apt-get install lvm2

- Use fdisk again to create a second partition. You can combine this step with the last one. Hit Enter at the prompts to use all available space, and change the new partition to type 8e, Linux LVM.

fdisk /dev/sdb
Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4): 2
First cylinder (641-1305, default 641):
Using default value 641
Last cylinder or +size or +sizeM or +sizeK (641-1305, default 1305):
Using default value 1305
Command (m for help): p
Disk /dev/sdb: 10.7 GB, 10737418240 bytes
255 heads, 63 sectors/track, 1305 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk identifier: 0x0005eeec
   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1         640     5140768+  83  Linux
/dev/sdb2             641        1305     5341612+  83  Linux
Command (m for help): t
Partition number (1-4): 2
Hex code (type L to list codes): 8e
Changed system type of partition 2 to 8e (Linux LVM)
Command (m for help): p
Disk /dev/sdb: 10.7 GB, 10737418240 bytes
255 heads, 63 sectors/track, 1305 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk identifier: 0x0005eeec
   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1         640     5140768+  83  Linux
/dev/sdb2             641        1305     5341612+  8e  Linux LVM
Command (m for help): w
The partition table has been altered!
Calling ioctl() to re-read partition table.
Syncing disks.

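- The second partition must be larger than the first: dd copies all of /dev/sdb1 into the logical volume you create on the second partition, and LVM metadata uses a little of that partition's space. If it is not, check your math, or resize to a smaller percentage and try again.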

- Create a volume group and logical volume.

pvcreate /dev/sdb2

vgcreate volgroup /dev/sdb2

lvcreate -l 100%VG -n backuppc volgroup
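Before copying, you can confirm that the new logical volume really is at least as large as /dev/sdb1. A minimal sketch: bytes_fit and lv_fits are hypothetical helper names, and the sketch assumes blockdev from util-linux is installed and a shell that supports `local` (bash or dash).

```shell
# Hypothetical helper: succeed if a source of $1 bytes fits into a
# target of $2 bytes; otherwise report the shortfall on stderr.
bytes_fit() {
    if [ "$1" -le "$2" ]; then
        echo "OK: $1 <= $2 bytes"
    else
        echo "TOO SMALL: target is $(( $1 - $2 )) bytes short" >&2
        return 1
    fi
}

# Hypothetical wrapper: read both device sizes with blockdev.
# Returns 2 if either size cannot be read.
lv_fits() {
    local src dst
    src=$(blockdev --getsize64 "$1") || return 2
    dst=$(blockdev --getsize64 "$2") || return 2
    bytes_fit "$src" "$dst"
}
```

For example: `lv_fits /dev/sdb1 /dev/volgroup/backuppc`. Do not start dd unless it prints OK.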

Step 4: Copy the filesystem to the logical volume

- Use dd, the sysadmin’s oldest friend, to copy the filesystem bit-for-bit from the old partition to the new. This may take several hours or days.

dd if=/dev/sdb1 of=/dev/volgroup/backuppc

- If you get impatient during the copy, you can force dd to output the current progress. Find the process ID of the running dd, and send a USR1 signal.

ps -ef | grep dd
root      4786  4642 26 15:44 pts/0    00:00:01 dd if=/dev/sdb1 of=/dev/volgroup/backuppc

kill -USR1 4786
18335302+0 records in
18335302+0 records out
9387674624 bytes (9.4 GB) copied, 34.6279 seconds, 271 MB/s

- A neater way to do this is:

dd if=/dev/sdb1 of=/dev/volgroup/backuppc &

pid=$!

kill -USR1 $pid

- When dd finishes you will see one last progress report, which should total the size of /dev/sdb1.
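If you start dd with its standard error redirected to a file, both the USR1 progress reports and the final report land in that file, and you can compare the final byte count with the size of /dev/sdb1. This is a sketch: dd_copied_all is a hypothetical helper and /root/dd.log is just an example path. (GNU coreutils 8.24 and later also support `dd status=progress`, which prints progress continuously; older versions do not have it.)

```shell
# Hypothetical helper: $1 is dd's statistics output (e.g. a whole log),
# $2 is the expected size in bytes. Succeeds if the last
# "N bytes ... copied" line reports exactly $2 bytes.
dd_copied_all() {
    local copied
    copied=$(printf '%s\n' "$1" | awk '/ bytes / { print $1 }' | tail -n 1)
    [ -n "$copied" ] && [ "$copied" -eq "$2" ]
}
```

For example, start the copy with `dd if=/dev/sdb1 of=/dev/volgroup/backuppc 2> /root/dd.log &`, watch progress with `tail /root/dd.log` after each `kill -USR1 $pid`, and once dd has exited run `dd_copied_all "$(cat /root/dd.log)" "$(blockdev --getsize64 /dev/sdb1)" && echo complete`.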

Step 5: Add the first partition to the volume group

We’ve copied our data to the logical volume, so we no longer need the original partition. Add it to the volume group.

- Use fdisk to change partition 1 to type 8e.

fdisk /dev/sdb
Command (m for help): p
Disk /dev/sdb: 10.7 GB, 10737418240 bytes
255 heads, 63 sectors/track, 1305 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk identifier: 0x0005eeec
   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1         640     5140768+  83  Linux
/dev/sdb2             641        1305     5341612+  8e  Linux LVM
Command (m for help): t
Partition number (1-4): 1
Hex code (type L to list codes): 8e
Changed system type of partition 1 to 8e (Linux LVM)
Command (m for help): p
Disk /dev/sdb: 10.7 GB, 10737418240 bytes
255 heads, 63 sectors/track, 1305 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk identifier: 0x0005eeec
   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1         640     5140768+  8e  Linux LVM
/dev/sdb2             641        1305     5341612+  8e  Linux LVM
Command (m for help): w
The partition table has been altered!
Calling ioctl() to re-read partition table.
Syncing disks.

- Add it to the volume group.

pvcreate /dev/sdb1

vgextend volgroup /dev/sdb1

Step 6: Expand the logical volume and filesystem

We’ve moved the data to a logical volume and added all the disk space to the volume group. Now we expand the logical volume and filesystem to use the available space.

- Extend the logical volume. I advise using only 75% of the volume group, leaving the rest free for LVM snapshots.

lvextend -l 75%VG /dev/volgroup/backuppc

- Resize the filesystem to use the entire logical volume. This may take several hours or days.

resize2fs /dev/volgroup/backuppc

- If at any point you’ve mounted the filesystem, you’ll have to run a new fsck before resizing. This will cause another long delay, so try to avoid it.

Step 7: Mount the logical volume

We’re now ready to mount the new logical volume on /var/lib/backuppc and restart BackupPC.

- Edit /etc/fstab and replace any references to /dev/sdb1 with /dev/volgroup/backuppc. If your fstab uses UUIDs instead of explicit device paths, you shouldn’t have to change anything, because dd copied the filesystem with its UUID intact.

- Mount the filesystem.

mount /var/lib/backuppc

- Restart BackupPC.

/etc/init.d/backuppc start
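The /etc/fstab edit in this step can also be scripted. A minimal sketch, assuming your fstab names the device by path: fstab_swap_device is a hypothetical helper that changes only entries whose first field is exactly the old device, and keeps a backup copy next to the file.

```shell
# Hypothetical helper: in FILE, replace device OLD with NEW in the first
# field of matching entries. The original is kept as FILE.bak.
# Only exact matches change, so /dev/sdb10 is left alone; awk rewrites
# a changed line with single spaces between fields.
fstab_swap_device() {
    local file=$1 old=$2 new=$3
    cp -p "$file" "$file.bak" || return 1
    awk -v old="$old" -v new="$new" '$1 == old { $1 = new } { print }' \
        "$file.bak" > "$file"
}
```

For example, before mounting: `fstab_swap_device /etc/fstab /dev/sdb1 /dev/volgroup/backuppc`, then check the result with `grep backuppc /etc/fstab`.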

You have successfully migrated to LVM!

-

I’m going to confess to a horrible thing I did.

I converted to LVM. In place. Without first reducing disk usage to 49%. It was a bit of a Zen exercise, and the data wasn’t at all valuable, obviously, because no one should expect that sort of thing to work.

Tools:

1. a script to reverse a block device in 4M chunks (included with linux kernel documentation, needs adjustment)

2. dd

3. cp --sparse=always

4. losetup

I’m sure you can figure out the process: free the last extent of the disk, set up a reversed LVM device at the end of the disk, copy the last extent of the file system into the reversed LVM device, then grow the reversed LVM device at its logical end and its physical beginning. Repeat. Then set up a normal LVM device at the start of the disk and migrate extents there from the reversed LVM device, which will shrink to zero and leave you, ideally, with something you could have done in ten minutes with a backup disk.

The cp --sparse=always comes in if you have to use intermediate file systems because a file system was unshrinkable: you can still effectively shrink it by splitting it into chunks stored in sparse files on another file system and keeping it as zeroed-out as possible.

Forgive me father, for I have sinned.

-

It did work, though it was sheer dumb luck that none of the many, many dd invocations went horribly wrong.

But, seriously: why is this so difficult? My impression is ext[234]fs is pretty good at not using certain areas of the disk (historically, for badblocks). In theory, it should be as easy as this:

0. unmount the file system

1. create an extent-sized file of zeroes, and make sure it is extent-aligned: thus, you’ll have a free extent to shift things to.

2. copy the first file system extent into the zeroed extent

3. mark the duplicate extent as bad, preventing the file system from using it. delete the zeroes file.

4. overwrite the first file system extent with LVM header data, remapping the first extent to the new copy, the other extents 1:1, and the bad extent to an error/zeroes entry

5. mount as LVM

That would take 10 seconds, and it would reduce your file system size by one extent (though you could always resize2fs and use the last extent to avoid the "4M marked as bad" artifact). It would actually be safer than anything that involves rewriting everything twice (obviously, any file-system-modifying code should be considered extremely dangerous for the first few hundred times you run it). Also note that it splits nicely into a part that only involves the file system and a pure LVM operation that turns a partition into an LVM PV containing the partition.

I’m afraid my point is that I’m a grumpy old man, and it’s 2010 and we still have exciting new features added in a way that makes migration unbelievably difficult. A file system that you want to turn into, essentially, a bunch of files on a new file system you create around it isn’t the exception, it’s the rule for any exciting migration.

Want your file system encrypted? Essentially, you’ve got to go through the same thing again, because some more metadata wants to be stored at the beginning of the image.

Want your LVM layout encrypted? Your best bet is LVM over crypto over LVM, so that’s the same procedure again (and another extent lost, but that’s hardly an issue).

Sorry for ranting. It’s good to have readable instructions for the common, using-less-than-49% case, and thanks for going to the trouble of writing those.

-

IMHO, LVM is just that, a couple of extra blocks at the beginning of the partition. So I would have thought it should be simple enough to:

1) reduce the fs by a couple of blocks (say 4M or whatever)

2) move the entire fs to the ‘end’ of the partition (move the second last block to the last block and so on until the first block is free)

3) create the LVM headers etc. at the beginning of the partition

All done, even if the filesystem was originally 99% full, and with only one complete copy of the data. I’ve certainly managed to complete the reverse of the above (recovering a filesystem from a corrupted LVM where there was only a single filesystem on a single PV, using mount -o loop … and telling losetup to skip the first few blocks), so I don’t see why it couldn’t work in reverse.

PS, if your data is important, don’t do it, it isn’t worth it :)
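The block-by-block move in step 2 of the comment above can be tried out on a scratch image file. This is a minimal sketch: shift_blocks is a hypothetical helper, it assumes a shell with `local` (bash or dash), and it runs one dd per block, so it is far too slow for a real disk. The key point is the order: copying from the last block backwards means each source block is read before anything overwrites it.

```shell
# Hypothetical helper, for experiments on an image file only.
# Moves blocks 0..NBLOCKS-1 of FILE forward by OFF blocks of BS bytes.
# Copies from the last block backwards (like memmove with an
# overlapping, higher destination); conv=notrunc keeps the rest of FILE.
shift_blocks() {
    local file=$1 nblocks=$2 off=$3 bs=${4:-4096}
    local i=$((nblocks - 1))
    while [ "$i" -ge 0 ]; do
        dd if="$file" of="$file" bs="$bs" skip="$i" seek=$((i + off)) \
            count=1 conv=notrunc 2>/dev/null || return 1
        i=$((i - 1))
    done
}
```

For example, on a file containing `AAAABBBBCCCCxxxx`, `shift_blocks file 3 1 4` leaves `AAAAAAAABBBBCCCC`: the data moved up one 4-byte block, and the first block is now free for new headers.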

-

There’s an in-place approach that works for most common filesystem types (ext[2-4], btrfs, and reiserfs, all of which have shrink support); the idea is to move one PE from the start to the end of the partition, and inject LVM configuration in the freed space (superblock label and PV metadata area, with an LV that puts the moved PE at the correct logical address). For filesystems that support badblocks but not shrinking, reserving contiguous data through a file and badblocking it might also work to free the PE space, but there should be some simpler approaches that work on multiple partitions: find one partition with a shrinkable filesystem or discardable swap space, and use it to generate a PV that covers multiple partitions; then edit the partition table. So far I have implemented the simple, single-partition approach (lvmify, link on the username).

-


Trackback link: https://www.tolaris.com/2010/05/06/moving-an-existing-backuppc-partition-to-lvm/trackback/