We recently moved our primary backup machine to another location, and have overhauled a lot of our network infrastructure. This meant a lot of work updating the Bacula configurations on those machines. I’ve been unhappy with Bacula for some time, so I replaced it with BackupPC.

Bacula is very complicated. That complication provides flexibility (if you want your level-0 backups to run on Tuesdays at 19:00 and Thursdays at 22:30, and to exclude some files on Wednesdays only, it can), but it also makes it brittle. One daemon hangs for any reason, and half the backups never complete. It scales well to very large enterprise sizes, but I don’t really need that yet.

What Bacula does really well is dump data to tape. I don’t use tape; I use a RAID array. When you dump data to tape, you do it in series. But when you dump data to disk, you can just as easily do it in parallel. Calculating checksums and compressing files limits the rate at which you actually transfer those files to the backup store. With most of my servers, that works out to about 20 Mbit/s at most. But my servers are typically connected via gigabit LAN, so why not back up several at the same time? Further, my backup window is limited to 12 hours at night to avoid affecting normal traffic during the day. If you run backups in series and one takes 4 hours, the others risk pushing into the morning work hours.

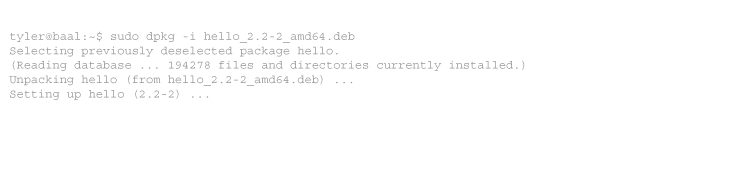

How to install and configure BackupPC is already well documented elsewhere. Where my install differs is that we don’t run Windows, so we don’t have to deal with backing it up. All of my defaults are configured to use rsync and expect Linux filesystems.
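For reference, here is a minimal sketch of what rsync-over-SSH defaults for Linux hosts might look like in BackupPC 3.x’s config.pl. The client command is, as far as I know, the stock default; the share name and the exclude list are assumptions for illustration, not my exact settings. Note the single quotes: BackupPC substitutes $sshPath, $host and the other variables itself at run time.

```perl
# Sketch: global rsync defaults for Linux clients (BackupPC 3.x config.pl).
$Conf{XferMethod} = 'rsync';

# BackupPC expands $sshPath, $host, $rsyncPath and $argList+ itself,
# so this must stay a single-quoted string.
$Conf{RsyncClientCmd} = '$sshPath -q -x -l root $host $rsyncPath $argList+';

# Back up from the root of each client (assumed).
$Conf{RsyncShareName} = ['/'];

# Skip pseudo-filesystems; the '*' key applies the list to every share.
# The exact list is an assumption, adjust to taste.
$Conf{BackupFilesExclude} = {
    '*' => ['/proc', '/sys', '/tmp'],
};
```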

What BackupPC does really well is just back stuff up, to disk, whenever it can. It is possible to force exactly when backups run, but in general the system isn’t designed that way. I tried to force everything to kick off at 20:00 UTC nightly and run until finished. But by default, BackupPC is configured to wake up every hour on the hour, and to run the next backup 24 hours after the last one finished. I soon realised that it was best to do it that way. When a server is down or the backup fails, BackupPC tries again every hour until it succeeds. That is far more robust than “the backup failed last night, so we have to wait until tomorrow night”.
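The hourly retry behaviour comes from a handful of settings. The values below are, to my knowledge, close to the BackupPC 3.x shipped defaults; treat them as a sketch and check your own config.pl. Periods are given in days and set slightly under a whole number, so that the time a backup takes plus the hourly wakeup granularity doesn’t push each day’s backup later and later.

```perl
# Wake up every hour from 01:00 to 23:00. The first entry in the list
# also determines when the BackupPC_nightly pool cleanup runs.
$Conf{WakeupSchedule} = [1 .. 23];

# Minimum days between incrementals: just under 1, so a host backed up
# yesterday is due again at roughly the same wakeup today.
$Conf{IncrPeriod} = 0.97;

# Minimum days between full backups: roughly weekly.
$Conf{FullPeriod} = 6.97;
```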

So what about not running backups during the day? It can do that too. You can specify exactly which hours to wake up and perform pending jobs:

    $Conf{WakeupSchedule} = [ '19', '20', '21', '22', '23', '0', '1', '2', '3', '4', '5', '6' ];

But a better way is to run every hour anyway, and instead use blackout periods:

    $Conf{WakeupSchedule} = [ '12', '13', '14', '15', '16',
        '17', '18', '19', '20', '21', '22', '23', '0', '1',
        '2', '3', '4', '5', '6', '7', '8', '9', '10', '11' ];
    $Conf{BlackoutBadPingLimit} = '3';
    $Conf{BlackoutGoodCnt} = '7';
    $Conf{BlackoutPeriods} = [ {
        'weekDays' => [ '0', '1', '2', '3', '4', '5', '6' ],
        'hourBegin' => '6.5',
        'hourEnd' => '18.5',
    } ];

Now BackupPC wakes up every hour. But once a host has answered 7 consecutive pings (one ping per wakeup, with up to 3 consecutive failed pings tolerated before that count is reset), backups for it will not start between 06:30 and 18:30. Unfortunately all times are expressed in hours, so you must use decimal hours (6.5 for 06:30) instead of HH:MM. You’ll only make a few mistakes before you get used to it.
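As a hypothetical variation (not the configuration above), here is a blackout covering weekdays only, from 07:45 to 18:15, to show the decimal-hour conversion. In weekDays, 0 is Sunday, so 1 to 5 are Monday to Friday.

```perl
# Hypothetical: block backups during office hours on weekdays only.
# Times are decimal hours: 07:45 = 7 + 45/60 = 7.75, 18:15 = 18.25.
$Conf{BlackoutPeriods} = [
    {
        hourBegin => 7.75,
        hourEnd   => 18.25,
        weekDays  => [1, 2, 3, 4, 5],   # Monday..Friday (0 = Sunday)
    },
];
```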

The advantage of the blackout system is that a recently failed server is backed up within an hour of coming back online, even during the day, while backups of healthy servers are restricted to the later hours. Servers just added to the system may also back up during the day because they don’t have 7 good pings yet. The easy solution is to add new servers at night. Backups tend to stay close to the time they last ran, but can drift within the normal job period.

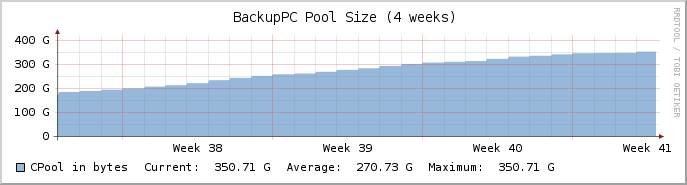

While backup times can move around, every server is still backed up every 24 hours, at some point during the night. Now that I’ve run this for 5 weeks, on 47 servers, I’m very pleased with it. It has proven more reliable than Bacula, and the disk usage is far, far better. BackupPC compresses and pools stored files, and uses hashes to determine if a file needs to be archived again. If you have multiple copies of the same file, across all servers, you only have one on disk on the BackupPC server. Backing up every copy of /usr/ suddenly makes no difference at all. From the web interface:
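Pooling needs no configuration, but compression is controlled by a single setting. A minimal sketch, assuming the Compress::Zlib Perl module is installed on the BackupPC server (BackupPC relies on it for compression); the level shown is a commonly suggested middle value, not necessarily what I run.

```perl
# 0 disables compression; 1..9 are zlib levels (higher = smaller, slower).
# Compressed files are pooled separately from uncompressed ones.
$Conf{CompressLevel} = 3;
```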

There are 47 hosts that have been backed up, for a total of:

* 177 full backups of total size 1331.97GB (prior to pooling and compression),

* 395 incr backups of total size 783.92GB (prior to pooling and compression).

Pool is 337.28GB comprising 4081543 files and 4369 directories (as of 2009-10-08 13:46),

Pool hashing gives 543 repeated files with longest chain 21,

Nightly cleanup removed 63257 files of size 37.75GB (around 2009-10-08 13:46),

Pool file system was recently at 29% (2009-10-08 21:25), today's max is 29% (2009-10-08 12:00) and yesterday's max was 29%.

That’s 5 weeks of weekly level-zeroes plus the last 14 daily incrementals. The same data in Bacula for only 27 servers came to 900 GB. BackupPC is configured to keep backups going back 5 months; at best I could only manage a month of Bacula backups for the same period.
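Retention in BackupPC is expressed as keep counts rather than dates. A sketch with assumed numbers: with weekly fulls, about 22 fulls is roughly five months of history, and 14 incrementals matches the daily incrementals mentioned above.

```perl
# Hypothetical retention sketch. Keep counts are numbers of backups, not days.
$Conf{FullKeepCnt} = 22;   # ~22 weekly fulls, i.e. about five months
$Conf{IncrKeepCnt} = 14;   # the most recent 14 daily incrementals
```

$Conf{FullKeepCnt} also accepts an array such as [4, 2, 3], meaning 4 fulls at the full period, 2 at twice that spacing and 3 at four times that spacing, which stretches the history further for the same disk.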

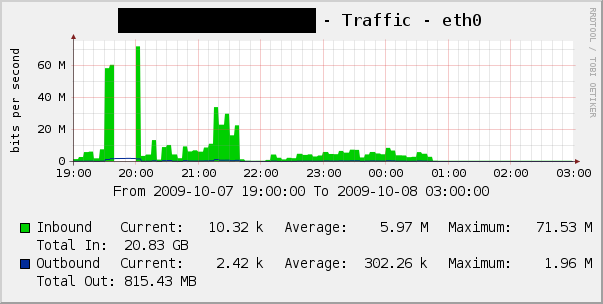

What about data throughput? Bacula topped out at about 20 Mbit/s even for gigabit-connected hosts, and it could only run serially (unless I configured multiple “storage devices”, i.e. directories, but there is no way to pool them for dynamic use). Allowing only 3 simultaneous backups, the BackupPC server routinely exceeds 60 Mbit/s of traffic during the first hour of the run.
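The concurrency limit is a single setting. A minimal sketch: 3 matches the limit described above; my understanding is that the shipped default for both values is 4.

```perl
# Maximum number of scheduled backups BackupPC runs at the same time.
$Conf{MaxBackups} = 3;

# Additional slots for backups requested by hand from the web interface,
# on top of MaxBackups.
$Conf{MaxUserBackups} = 4;
```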

Unfortunately I don’t have useful Cacti graphs from the time we ran Bacula, but for a single backup it was at best 80% as fast as BackupPC. And of course it could run only one at a time.

What else does BackupPC have over Bacula?

- A good web interface. Yes, webacula exists, but you are still limited to editing config files and using an interactive terminal interface to do anything useful. With BackupPC you can use any standards-compliant browser to review statistics and logs about recent backups, edit the config, browse backups in tree format and restore from them, and start additional backups outside of the normal schedule. You can also grant users the ability to browse and schedule backups for specific servers or desktops, but not see any others.
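Access in the web interface is split between administrators and per-host users, with authentication itself handled by the web server. A sketch using a hypothetical admin login, backupadmin: admins are named in config.pl, while ordinary users are attached to individual hosts through the user and moreUsers columns of the BackupPC hosts file, and see only those hosts.

```perl
# Hypothetical: only 'backupadmin' gets full admin rights in the CGI.
# No Unix group is granted admin rights.
$Conf{CgiAdminUserGroup} = '';
$Conf{CgiAdminUsers}     = 'backupadmin';
```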

- Inline help. When editing BackupPC’s config in the web GUI, all configuration variables are hyperlinks to the HTML documentation.

- Several ways to restore. You can restore files directly to the server over the same transport you use to back up (rsync, ssh+tar, SMB). You can also download a ZIP or tar.gz archive through your browser.
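Direct restores over rsync use their own command setting, which typically mirrors the backup command. A sketch with what I believe is the stock value; as with the backup command, the string must stay single-quoted so BackupPC can substitute the variables.

```perl
# Command used when restoring straight back to the client over rsync+ssh.
$Conf{RsyncClientRestoreCmd} = '$sshPath -q -x -l root $host $rsyncPath $argList+';
```

The ZIP download option additionally needs the Archive::Zip Perl module on the BackupPC server.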

- No database dependency. The filesystem is the database. Files are stored in disk trees, and logs are text on disk. I’m not against using MySQL, but it adds one more point of failure.

- No client daemon. That’s right, nothing to install on the clients. Just add the BackupPC server’s SSH public key to root’s authorized_keys on each client, and rsync does the rest. If you think that’s a security risk, it is; but any other backup system has the same vulnerability. I suggest using key access limits, such as the from= and command= options in authorized_keys.

I’ve tested basic restores and bare-metal ones. So far there are no issues with BackupPC, and I’m much happier using it. It is simpler, less prone to failure, and less likely to require user intervention. It may not scale to 100 distributed backup stores with tape drives, but it is excellent for the small-to-medium enterprise.

-

I’m also using BackupPC and the only big disadvantage I see: There is no easy way to duplicate the pool. Storing backups to tape (by creating TAR archives via built-in commands) is very time-consuming.
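For the tape route, BackupPC 3.x can define an “archive host” whose job is to write tar archives of other hosts’ latest backups to a destination. A sketch of the per-host config for such a host, assuming a hypothetical staging directory; as I understand it, the destination may also be a tape device such as /dev/nst0.

```perl
# Per-host config for a hypothetical archive host.
$Conf{XferMethod}  = 'archive';
$Conf{ArchiveDest} = '/mnt/tape-staging';   # hypothetical destination directory
$Conf{ArchiveComp} = 'gzip';                # 'none', 'gzip' or 'bzip2'
```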

It is also important to point out that BackupPC does stress the file system a lot! ext3 seems to be a poor choice. Currently, people favor xfs, reiserfs (which is mostly unmaintained) or zfs (which is Solaris-only at this time).

Thanks for the report,

Tino.

PS: I’m using Bacula “behind BackupPC” for having tape and offsite backup. And I’ve had issues with jobs hanging etc. with Bacula…

-

Excellent point of view, I’m also a big fan of BackupPC and I’ve been using it in production environments for some time now, some with several terabytes of info (the largest pool is about 6TB) and it works perfectly.

Apart from that, BackupPC can also back up servers and client computers outside your LAN. I have a customer in the USA and live in Portugal; every day I back up their data over the Internet, securely and efficiently, using a plain broadband connection (200 KB/s).

Cheers

Pedro Oliveira

PS: About file systems, I’m using ext3 in production and testing ext4, which is performing better than ext3. I used to use reiserfs (which is faster), but the lack of maintenance and community effort worries me.

-

Hi!

Great article! You are right. I have recently evaluated several open source backup tools and came to the same conclusion regarding Bacula: very competent, but difficult to manage. Too difficult for our needs, with about 15 Windows and Linux boxes. I have only tested BackupPC for a few hours, but it seems really promising. The only thing I have found so far is that it is better to use rsyncd than SMB, even for Windows machines.
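With the rsyncd method, the client runs an rsync daemon and BackupPC connects to one of its modules directly, without SSH. A per-host sketch; the module name and credentials are hypothetical and must match the client’s rsyncd.conf and secrets file.

```perl
# Per-host config for a Windows client running an rsync daemon.
$Conf{XferMethod}     = 'rsyncd';
$Conf{RsyncShareName} = ['cDrive'];     # hypothetical rsyncd module name
$Conf{RsyncdUserName} = 'backuppc';     # hypothetical, from the secrets file
$Conf{RsyncdPasswd}   = 'change-me';    # hypothetical
```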

/Per-Ola

-

We’ve been using backuppc for over a year now and it has worked perfectly so far.

I think the key advantages are that it’s written with the Unix philosophy in mind and uses existing tools that just work, e.g. rsync and ssh. I am using it with reiserfs3 and have never had any problems. It’s true that there isn’t much development, but right now kernel devs are working on removing the BKL from reiser, so *it is* still maintained, and it is simply the best for a file system containing lots of small files (our BackupPC installation has *lots* of small files).

-

I’ve been using BackupPC for over 5 years now and it has never let me down. The web-interface in 3.x really is all you need to manage a very capable backup system, much easier to use than Bacula’s.

As for filesystems, ext3 isn’t all that bad, except for when the pool is being purged of old files during the night. I achieved best results with JFS, XFS and (recently) ext4. I might try BtrFS in the future, but it seems it still has a long way to go before it’s stable enough for the thrashing BackupPC is going to do to it.

One more hint, though: stay away from LVM2 on the server running BackupPC! If something does go wrong with a hard disk in a RAID on top of logical volumes, it really serves to ruin your last chance of rescuing any data at all.

-

I know this article is old and might be close to a fossil… but I bumped into it today because I started using Bacula AND BackupPC in tandem.

Why? Because they serve two different goals. I DO use tapes! RAID archiving is NOT a disaster recovery solution… maybe disaster prevention, but definitely not a recovery solution. In fact some RAID levels, like 5, are very dangerous (check the BAARF movement), as it is possible to lose or corrupt an entire array without a single disk failure… silently! I’m now using RAID 10 for critical data, but I digress. I personally do multiple levels of backup. I use snapshots of virtual machines and BackupPC for physical machines for immediate recovery, and then archive the whole set onto a tape library, which is where Bacula really shines. It manages my volumes very nicely and even makes use of the tape library’s barcode feature. I was using a proprietary solution for the last 5 years and was getting tired of its limitations. If I eventually virtualize the remaining 2% of our 50+ servers, I might discontinue BackupPC, but not Bacula.

As for BackupPC, the dedup feature is very nice, but “materializing” a backup to a full filesystem is somewhat I/O intensive. It really brings my 6TB RAID array to its knees, but it’s really fast for recovering “that” file someone deleted by mistake.

-

Horses for courses I think. I’ve used BackupPC for many years at home and look at what else is out there in the open source market every couple of years.

My two pet annoyances with BackupPC are, first, that it tends to give strange tar errors (they don’t match the exact error the command line gives for the same tar command over SSH), which sometimes makes initial backups hard to set up. Second, I cannot find a facility in BackupPC for showing the full list of files that were backed up in a particular session. Browsing the backups tends to ‘fill’ from the last full backup (as I have mine set to do this), so it isn’t very useful for auditing.

Still, with a little bit of blind faith that ‘no error email = successful backups’, BackupPC has been much nicer. I will probably switch as my underlying storage environment becomes more heterogeneous (I am starting to use tapes more), since managing different storage pools seems easier in Bacula.

-


Trackback link: https://www.tolaris.com/2009/10/08/replacing-bacula-with-backuppc/trackback/