Update 2012-01-18: This guide has been updated for recent changes, and is safe to use on current Ubuntu and Debian releases.

One of the reasons I started this blog is to write about problems I’ve solved that don’t already have answers on the web. Today, let’s talk about moving your Linux install to Linux software RAID (md RAID / mdadm). This post assumes you are running Ubuntu Linux 8.04, but any Debian-based distro from the past two years, and most other distros, will have similar commands.

We start with an install on a single 80 GB SATA drive, partitioned as follows:

/dev/sda1 as /, 10GB, ext3, bootable

/dev/sda2 as swap, 1GB

/dev/sda3 as /home, 69GB, ext3

We want to add a second 80GB SATA drive and move the entire install to use RAID1 between the two drives. The final configuration will be:

/dev/md0 as /, 10GB, ext3

/dev/md1 as swap, 1GB

/dev/md2 as /home, 69GB, ext3

Where the raid arrays are:

md0 : active raid1 sda1[0] sdb1[1]

md1 : active raid1 sda2[0] sdb2[1]

md2 : active raid1 sda3[0] sdb3[1]

Here there be dragons. As always, back up your data first. If you don’t know how to use rsync, now is an excellent time to learn.

The general plan is:

- Partition the new drive

- Create RAID arrays and filesystems on the new drive

- Copy the data from drive 1 to the new RAID arrays on drive 2

- Install grub on drive 2

- Configure fstab and mdadm.conf, and rebuild initramfs images

- Reboot on the RAID arrays on drive 2

- Repartition drive 1 and add it to RAID

All commands are run as root. Use sudo if you prefer.

Step 1: Partition the new drive

Assuming you want to partition the second drive the same way as the first, this is easy. Just clone the partitions from /dev/sda to /dev/sdb:

sfdisk -d /dev/sda | sfdisk /dev/sdb

You may want to wipe the new disk first to clear it of old data, especially if it previously held software RAID partitions. If it has a partition structure or RAID setup similar to the original disk’s, you may get unexpected results.
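One way to clear leftover RAID metadata is to zero the md superblock on each partition of the new disk after cloning the partition table, before creating the new arrays. This is a minimal sketch, assuming the new disk is /dev/sdb with three partitions; `zero_old_superblocks`, `run` and the `DRY_RUN` variable are hypothetical helpers, and with `DRY_RUN` set the commands are only printed so you can check the device names first.

```shell
# Sketch: zero leftover md RAID superblocks on the new disk's partitions.
# Assumptions: the new disk is /dev/sdb (override with the first argument)
# and it has partitions 1-3. This destroys RAID metadata on that disk.

# Hypothetical helper: print the command if DRY_RUN is set, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

zero_old_superblocks() {
    disk=${1:-/dev/sdb}
    for n in 1 2 3; do
        # mdadm exits non-zero when a partition has no superblock; ignore that.
        run mdadm --zero-superblock "${disk}${n}" || true
    done
}
```

Run `DRY_RUN=1 zero_old_superblocks /dev/sdb` to review the commands, then run it again without `DRY_RUN`.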

Now use parted to mark the partitions for software RAID and to set the boot flag on the first partition:

parted /dev/sdb

(parted) toggle 1 raid

(parted) toggle 2 raid

(parted) toggle 3 raid

(parted) toggle 1 boot

(parted) print

Disk /dev/sdb: 80.0GB
Sector size (logical/physical): 512B/512B
Partition Table: msdos

Number  Start   End     Size    Type     File system  Flags
 1      0.51kB  10.0GB  10.0GB  primary  ext3         boot, raid
 2      10.0GB  11.0GB  2000MB  primary  linux-swap   raid
 3      11.0GB  80.0GB  69.0GB  primary  ext3         raid

(parted) quit

Parted shows a file system in each partition, but they are really plain Linux software RAID partitions (type 0xfd):

fdisk -l /dev/sdb

Disk /dev/sdb: 80.0 GB, 80026361856 bytes
255 heads, 63 sectors/track, 9729 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes
Disk identifier: 0xa3181e57

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1   *           1        1216     9765625   fd  Linux RAID autodetect
/dev/sdb2            1216        1338      976562+  fd  Linux RAID autodetect
/dev/sdb3            1338        9730    67408556   fd  Linux RAID autodetect
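If you prefer not to drive parted interactively, its script mode (`-s`) can set the same flags in one pass. A minimal sketch, assuming the partitions were already cloned onto /dev/sdb; `mark_raid_partitions`, `run` and `DRY_RUN` are hypothetical names. It uses `set N FLAG on` rather than `toggle`, so running it twice does not accidentally clear a flag.

```shell
# Sketch: scripted equivalent of the interactive parted session above.
# Assumption: the disk (default /dev/sdb) already has partitions 1-3.

# Hypothetical helper: print the command if DRY_RUN is set, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

mark_raid_partitions() {
    disk=${1:-/dev/sdb}
    for n in 1 2 3; do
        # "set ... on" is idempotent, unlike "toggle".
        run parted -s "$disk" set "$n" raid on
    done
    run parted -s "$disk" set 1 boot on
    # Print the table so the flags can be checked.
    run parted -s "$disk" print
}
```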

Step 2: Create RAID arrays and filesystems on the new drive

Now we create RAID 1 arrays on each partition. These arrays will have just one member each when we create them, which isn’t normal for RAID 1. So we’ll have to force mdadm to let us:

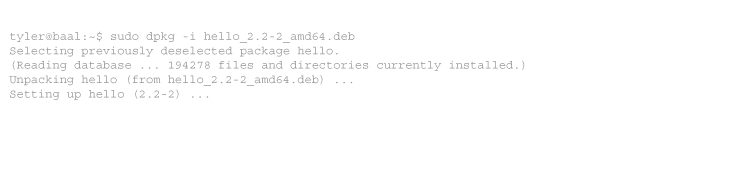

apt-get install mdadm

mdadm --create /dev/md0 --level=1 --force --raid-devices=1 /dev/sdb1

mdadm --create /dev/md1 --level=1 --force --raid-devices=1 /dev/sdb2

mdadm --create /dev/md2 --level=1 --force --raid-devices=1 /dev/sdb3
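The three commands above follow one pattern (partition N of the new disk becomes /dev/md(N-1)), so they can also be written as a loop. A sketch under that assumption; `create_degraded_arrays`, `run` and `DRY_RUN` are hypothetical names.

```shell
# Sketch: create the three single-member RAID 1 arrays from Step 2.
# Assumption: partition N on the new disk maps to /dev/md(N-1).

# Hypothetical helper: print the command if DRY_RUN is set, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_degraded_arrays() {
    disk=${1:-/dev/sdb}
    for n in 1 2 3; do
        # --force lets mdadm build a RAID 1 array with only one device.
        run mdadm --create "/dev/md$((n - 1))" --level=1 --force \
            --raid-devices=1 "${disk}${n}"
    done
}
```

A common alternative is to declare two devices and use the keyword `missing` for the absent one, for example `mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdb1 missing`. The array then starts degraded with an empty slot, so in Step 7 only the `--add` commands would be needed, not `--grow`.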

Next, you’ll need to create filesystems on the new RAID devices. Assuming the same filesystem types as on your first drive:

mkfs.ext3 /dev/md0

mkswap /dev/md1

mkfs.ext3 /dev/md2

Step 3: Copy the data from drive 1 to the new raid arrays on drive 2

This is a job for rsync. First, there are some directories on a running Linux system that we do not want to copy, like /dev and /proc. We also want to skip tmpfs directories, like /var/run. The easiest way to skip these is with a filter file. Create a file, /root/rsync-filter, with the following content.

If running Ubuntu 11.10 or newer:

- /dev/

- /home/*/.gvfs

- /lib/modules/*/volatile/

- /mnt/

- /proc/

- /run/

- /sys/

If running earlier releases:

- /dev/

- /home/*/.gvfs

- /lib/modules/*/volatile/

- /mnt/

- /proc/

- /sys/

- /var/lock/

- /var/run/

These lines define directories we will not copy over. You may wish to add /tmp, apt’s cache, and so on, but if you do, you must manually create those directories on the new filesystem.
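Both filter lists can be written by a small function. A sketch, assuming the hypothetical name `write_rsync_filter`; the second argument picks the list (`new` for Ubuntu 11.10 or newer, `old` for earlier releases). If it is omitted, the function guesses from whether /run exists, which is only a heuristic, so check the file before using it.

```shell
# Sketch: write the rsync filter file used in Step 3.
# Usage: write_rsync_filter [FILE] [new|old]
write_rsync_filter() {
    out=${1:-/root/rsync-filter}
    style=${2:-}
    if [ -z "$style" ]; then
        # Heuristic guess: newer releases keep runtime state in /run.
        if [ -d /run ]; then style=new; else style=old; fi
    fi
    {
        printf '%s\n' '- /dev/' '- /home/*/.gvfs' \
            '- /lib/modules/*/volatile/' '- /mnt/' '- /proc/'
        if [ "$style" = new ]; then
            printf '%s\n' '- /run/'
        fi
        printf '%s\n' '- /sys/'
        if [ "$style" = old ]; then
            printf '%s\n' '- /var/lock/' '- /var/run/'
        fi
    } > "$out"
}
```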

Mount the new RAID arrays:

mount /dev/md0 /mnt

mkdir /mnt/home

mount /dev/md2 /mnt/home

If you are using a different mount structure, just be sure to recreate it and mount it in the right places in the new filesystem under /mnt/.

And start the rsync copy:

rsync -avx --delete -n --exclude-from /root/rsync-filter / /mnt/

rsync -avx --delete -n /home/ /mnt/home/

You will see a list of files that will be changed, but nothing actually happens. This is the job of the -n argument, which performs a dry-run. Always do this before actually starting a copy. You WILL make a painful mistake with rsync some day, so learn to be cautious. Repeat the above commands without the -n when you are sure all is well.

The -x argument ensures that you will not cross filesystem boundaries, which is why you must copy /home, and any other mounted filesystems, separately. If you omit -x, you only need one command. But make sure you have a good rsync filter file, and that nothing you don’t want archived, like /media/cdrom, is mounted.

Finally, create the directories that you skipped with your filter. If running Ubuntu 11.10 or newer:

cd /mnt/

mkdir -p dev/ mnt/ proc/ run/ sys/

for i in /lib/modules/*/volatile ; do mkdir -p /mnt/$i ; done

If running earlier releases:

cd /mnt/

mkdir -p dev/ mnt/ proc/ sys/ var/lock/ var/run/

for i in /lib/modules/*/volatile ; do mkdir -p /mnt/$i ; done
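The directory recreation above can be wrapped in one function that takes the target root. A sketch; `recreate_skipped_dirs` is a hypothetical name, and the `new`/`old` argument mirrors the two release cases. The check inside the loop avoids creating a literal `*` directory when no kernel has a volatile directory.

```shell
# Sketch: recreate the directories skipped by the rsync filter.
# Usage: recreate_skipped_dirs [ROOT] [new|old]   (defaults: /mnt, new)
recreate_skipped_dirs() {
    root=${1:-/mnt}
    style=${2:-new}
    if [ "$style" = new ]; then
        mkdir -p "$root/dev" "$root/mnt" "$root/proc" "$root/run" "$root/sys"
    else
        mkdir -p "$root/dev" "$root/mnt" "$root/proc" "$root/sys" \
            "$root/var/lock" "$root/var/run"
    fi
    for i in /lib/modules/*/volatile; do
        # If the glob matched nothing, $i is the literal pattern; skip it.
        [ -d "$i" ] || continue
        mkdir -p "$root$i"
    done
}
```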

Step 4: Install grub on drive 2

OK, we almost have a working RAID install on the second drive. But it won’t boot yet. Let’s use chroot to switch into it.

mount --rbind /dev /mnt/dev

mount --rbind /proc /mnt/proc

chroot /mnt

Now we have a working /dev and /proc inside the new RAID array, and by using chroot we are effectively in the root of the new array. Be absolutely sure you are in the chroot, and not the real root of drive 1. Here’s an easy trick: make sure nothing is in /sys:

ls /sys

If it is empty, you’re in the new chroot.
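The /sys check can be made explicit so it does not depend on eyeballing `ls` output. A minimal sketch; `dir_is_empty` is a hypothetical helper, and `ls -A` is used so hidden entries count too.

```shell
# Sketch: succeed only if DIR exists and contains no entries at all.
dir_is_empty() {
    [ -d "$1" ] && [ -z "$(ls -A "$1")" ]
}
```

For example, `dir_is_empty /sys && echo "inside the chroot"` prints the message only when /sys is empty.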

Now we need to update grub to point to the UUID of the new filesystem on the RAID array. Get the UUID of /dev/md0’s filesystem:

tune2fs -l /dev/md0 | grep UUID

Filesystem UUID: 8f4fe480-c7ab-404e-ade8-2012333855a6
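If you want just the UUID for use in later commands, it can be cut out of the tune2fs output. A sketch; `fs_uuid_from_tune2fs` is a hypothetical name that reads `tune2fs -l` output on stdin. On systems with blkid, `blkid -s UUID -o value /dev/md0` prints the same value directly.

```shell
# Sketch: print the filesystem UUID from "tune2fs -l" output on stdin.
# The field separator is a colon followed by any number of spaces.
fs_uuid_from_tune2fs() {
    awk -F': *' '/^Filesystem UUID/ { print $2 }'
}
```

Usage: `NEW_UUID=$(tune2fs -l /dev/md0 | fs_uuid_from_tune2fs)`.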

We need to tell grub to use this new UUID. How to do this depends on which version of grub you are using.

Step 4.1: If you are using GRUB 2, which is the default on Ubuntu since 9.10 Karmic Koala:

Edit /boot/grub/grub.cfg and replace all references to the old UUID with the new. Find all the lines like this:

search --no-floppy --fs-uuid --set 9e299378-de65-459e-b8b5-036637b7ba93

And replace them with the new UUID for /dev/md0:

search --no-floppy --fs-uuid --set 8f4fe480-c7ab-404e-ade8-2012333855a6
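Replacing every occurrence by hand is error-prone, since the old UUID usually also appears in the `root=UUID=` kernel arguments. A sketch using GNU `sed -i`, assuming the hypothetical helper name `replace_uuid_in_file`; it keeps a `.orig` backup. UUIDs contain only hex digits and hyphens, so they are safe to use directly in the sed pattern.

```shell
# Sketch: replace OLD_UUID with NEW_UUID everywhere in a file
# (default /boot/grub/grub.cfg), keeping FILE.orig as a backup.
# Assumes GNU sed for in-place editing with -i.
replace_uuid_in_file() {
    old=$1 new=$2 file=${3:-/boot/grub/grub.cfg}
    cp -p "$file" "$file.orig" &&
        sed -i "s/$old/$new/g" "$file"
}
```

Afterwards, `grep -c OLD_UUID /boot/grub/grub.cfg` (with the real old UUID) should report 0.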

Do not run grub-mkconfig (or update-grub, which calls it), as this will undo your changes. Install grub on the second drive:

grub-install /dev/sdb

Go on to step 5 below.

Step 4.2: If you are using GRUB 1 (Legacy), which is the default on Ubuntu before 9.10 Karmic Koala:

Edit grub’s device.map and menu.lst files. Edit /boot/grub/device.map and make sure both drives are listed:

(hd0) /dev/sda
(hd1) /dev/sdb

Edit /boot/grub/menu.lst and find the line like this:

# kopt=root=UUID=9e299378-de65-459e-b8b5-036637b7ba93 ro

Replace the UUID with the one you just found. Leave the line commented, and save the file. Now rebuild menu.lst to use the new UUID:

update-grub

Double-check that each boot option at the bottom of menu.lst is using the right UUID. If not, edit them too. Finally, install grub on the second drive.

grub-install /dev/sdb

Step 5: Configure fstab and mdadm.conf, and rebuild initramfs images

We’re almost ready to reboot. But first, we need to build an initramfs that is capable of booting from a RAID array. Otherwise your boot process will hang at mounting the root partition. Still in the chroot, edit /etc/fstab and change the partition entries to the new filesystems or devices.

If your /etc/fstab uses “UUID=” entries, change them to the UUIDs of the new filesystems, like the following:

proc /proc proc defaults 0 0
UUID=8f4fe480-c7ab-404e-ade8-2012333855a6 / ext3 relatime,errors=remount-ro 0 1
UUID=c1255394-4c42-430a-b9cc-aaddfb024445 none swap sw 0 0
UUID=94f0d5db-c525-4f52-bdce-1f93652bc0b1 /home ext3 relatime 0 1
/dev/scd0 /media/cdrom0 udf,iso9660 user,noauto,exec,utf8 0 0

In the above example, the first UUID corresponds to /dev/md0, the second to /dev/md1, and so on. Find the UUIDs with:

/lib/udev/vol_id /dev/md0

If your distribution uses a newer udev, you may not have the vol_id command. Use:

/sbin/blkid /dev/md0

If your /etc/fstab uses device names like “/dev/sda1”, it’s a bit easier. Just change them to /dev/md0 and so on:

proc /proc proc defaults 0 0
/dev/md0 / ext3 relatime,errors=remount-ro 0 1
/dev/md1 none swap sw 0 0
/dev/md2 /home ext3 relatime 0 1
/dev/scd0 /media/cdrom0 udf,iso9660 user,noauto,exec,utf8 0 0
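For the /dev/sdaN case, the edit can be done with sed. A sketch assuming the partition-to-array mapping used in this post (sda1 to md0, sda2 to md1, sda3 to md2) and GNU sed; `sda_to_md_fstab` is a hypothetical name. Each pattern requires whitespace after the device name so that, for example, /dev/sda10 would not be rewritten, and a `.orig` backup is kept.

```shell
# Sketch: rewrite /dev/sdaN fstab entries to the matching /dev/mdM arrays.
# Mapping assumed: sda1 -> md0, sda2 -> md1, sda3 -> md2.
sda_to_md_fstab() {
    file=${1:-/etc/fstab}
    cp -p "$file" "$file.orig" &&
        sed -i \
            -e 's#^/dev/sda1\([[:space:]]\)#/dev/md0\1#' \
            -e 's#^/dev/sda2\([[:space:]]\)#/dev/md1\1#' \
            -e 's#^/dev/sda3\([[:space:]]\)#/dev/md2\1#' \
            "$file"
}
```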

Now, while still in the chroot, edit /etc/mdadm/mdadm.conf to list each RAID array:

DEVICE partitions
ARRAY /dev/md0 UUID=126a552f:57b18c5b:65241b86:4f9faf62
ARRAY /dev/md1 UUID=ca493308:7b075f97:08347266:5b696c99
ARRAY /dev/md2 UUID=a983a59d:181b32c1:bcbb2b25:39e64cfd
MAILADDR root

Find the UUID of each RAID array, which is not the same as the UUID of the filesystem on it (!), using mdadm:

mdadm --detail /dev/md0 | grep UUID
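The ARRAY lines can be built from that output rather than copied by hand. A sketch; `mdadm_array_line` is a hypothetical name that reads `mdadm --detail` output on stdin and uses only the array UUID. As a cross-check, `mdadm --detail --scan` prints ARRAY lines for all running arrays.

```shell
# Sketch: turn "mdadm --detail DEVICE" output (on stdin) into an
# mdadm.conf ARRAY line for DEVICE. This is the array UUID, not the
# filesystem UUID reported by tune2fs or blkid.
mdadm_array_line() {
    awk -v dev="$1" '$1 == "UUID" && $2 == ":" { print "ARRAY " dev " UUID=" $3; exit }'
}
```

Usage: `mdadm --detail /dev/md0 | mdadm_array_line /dev/md0 >> /etc/mdadm/mdadm.conf`, repeated for md1 and md2.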

Now, rebuild your current kernel’s initramfs image:

update-initramfs -u

Or all of them:

update-initramfs -u -k all

Step 6: Reboot on the RAID arrays on drive 2

Now we’re ready to reboot. First, exit the chroot and power off the machine cleanly. You have three options:

- If your BIOS allows you to select which drive to boot from, elect to boot from drive 2.

- Swap drives 1 and 2 so drive 2 becomes /dev/sda, and restart

- Use a USB recovery stick to boot from drive 2

When the system restarts, it should boot from the new RAID arrays. Check with df:

df -h

Filesystem            Size  Used Avail Use% Mounted on
/dev/md0              9.2G  1.9G  6.9G  21% /
varrun                498M   56K  498M   1% /var/run
varlock               498M     0  498M   0% /var/lock
udev                  498M   68K  498M   1% /dev
devshm                498M     0  498M   0% /dev/shm
lrm                   498M   39M  459M   8% /lib/modules/2.6.24-19-generic/volatile
/dev/md2               63G  130M   60G   1% /home

Step 7: Repartition drive 1 and add it to RAID

Finally, we add the old drive into the arrays. Assuming you chose option 1 or 3 and didn’t swap the drives’ cables:

sfdisk -d /dev/sdb | sfdisk --force /dev/sda

If you receive a warning like the following, reboot now before continuing:

Re-reading the partition table ...
BLKRRPART: Device or resource busy
The command to re-read the partition table failed
Reboot your system now, before using mkfs

If not, continue on:

mdadm /dev/md0 --grow -n 2

mdadm /dev/md1 --grow -n 2

mdadm /dev/md2 --grow -n 2

mdadm /dev/md0 --add /dev/sda1

mdadm /dev/md1 --add /dev/sda2

mdadm /dev/md2 --add /dev/sda3

If you chose option 2 and your drives now have a different ordering, swap “/dev/sda” and “/dev/sdb” everywhere above.
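The grow and add commands above can be looped over the three arrays. A sketch; `add_old_disk`, `run` and `DRY_RUN` are hypothetical names, and the disk argument defaults to /dev/sda, so after option 2 you would pass /dev/sdb instead of editing every command. It keeps the same order as above: grow all three arrays, then add the partitions.

```shell
# Sketch: grow each array to two members, then add the old disk's
# partitions. Assumption: partition N belongs to /dev/md(N-1).

# Hypothetical helper: print the command if DRY_RUN is set, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

add_old_disk() {
    disk=${1:-/dev/sda}
    for n in 1 2 3; do
        run mdadm "/dev/md$((n - 1))" --grow -n 2
    done
    for n in 1 2 3; do
        run mdadm "/dev/md$((n - 1))" --add "${disk}${n}"
    done
}
```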

The RAID array will now rebuild. To check its progress:

cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid1 sdb1[1] sda1[0]
      9767424 blocks [2/2] [UU]

md1 : active raid1 sdb1[1] sda1[0]
      1951808 blocks [2/2] [UU]

md2 : active raid1 sdb2[2] sda2[0]
      68693824 blocks [2/1] [U_]
      [>....................]  recovery =  0.4% (16320/68693824) finish=22.4min speed=8160K/sec

unused devices:
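To check the rebuild from a script rather than by reading /proc/mdstat, the sketch below looks for a `_` (missing member) in each array's status field, such as `[U_]`, and for `recovery` or `resync` lines. The function names are hypothetical, and the parsing assumes the /proc/mdstat layout shown above, where the status field is the last word on each array's `blocks` line.

```shell
# Sketch: helpers for watching an md RAID rebuild via /proc/mdstat.

# Print the name of every array whose status field contains "_".
degraded_arrays() {
    awk '/^md[0-9]+ :/ { name = $1 }
         / blocks / && $NF ~ /_/ { print name }' "${1:-/proc/mdstat}"
}

# Succeed while any array is still recovering or resyncing.
rebuild_in_progress() {
    grep -Eq 'recovery|resync' "${1:-/proc/mdstat}"
}

# Block until no rebuild is running, polling every N seconds (default 60).
wait_for_rebuild() {
    while rebuild_in_progress "${1:-/proc/mdstat}"; do
        sleep "${2:-60}"
    done
}
```

For example, `wait_for_rebuild && grub-install /dev/sda && grub-install /dev/sdb` waits for the rebuild before reinstalling grub.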

Once the arrays have finished rebuilding, reinstall grub on both drives, for good measure:

grub-install /dev/sda

grub-install /dev/sdb

You should now be able to boot with either drive removed, and your system will come up cleanly. If you ever need to replace a failed drive, remove it, then use step 7 above to clone the partition scheme to the new drive and add it to the arrays.

Gotchas:

If you are having issues with your RAID setup, especially if you have an older RAID setup or older release already, you might need these packages:

apt-get install lvm2 evms dmsetup

-

sfdisk -d /dev/sda | /dev/sdb

doesn’t work for me on CentOS 5.2

sfdisk -d /dev/sda | sfdisk /dev/sdb

works as expected.

-

Before mounting md0 and md2 and copying files to them, they may need to be formatted (right after step 2):

mkfs.ext3 /dev/md0; mkfs.ext3 /dev/md2

For the impatient, copying in step 3 can be shortened to:

cp -ax / /mnt

cp -ax /home/. /mnt/home/

This will preserve directories used for mounting, like mnt, sys, proc, cdrom, …

-

Same setup (Ubuntu 8.04), but my problem for the past few days was 2-fold:

1st at the end of step 4, grub-install didn’t work (hung), so I manually ran grub and entered:

device (hd1) /dev/sdb

root (hd1,0)

setup (hd1)

quit

That worked, but then on reboot at step 7, after selecting the second drive as the boot drive in the BIOS, the boot process would hang and drop me to initramfs. At this point, I could assemble /dev/md0 with

mdadm -A /dev/md0 /dev/sdb1

for each RAID partition, then exit from initramfs. Everything would boot then. After too many attempts at resolving this, I found that the problem was resolved by allowing the boot sequence to drop to initramfs, and enter

mdadm -As --homehost='' --auto=yes --auto-update-homehost

Then exit initramfs and allow the boot to complete. Reboot once (or twice), and everything works. From what I understand (noob here), when the RAID1 was created, the homehost= in mdadm.conf used by mdadm during the boot process didn’t match what was written to the RAID partition’s properties when it was created, so the arrays were not assembled automatically. Several days of debugging were summarized in that simple line…

That, and read about the problem with degraded RAID arrays here:

https://help.ubuntu.com/community/DegradedRAID

-

Marvelous article. Compact and with rich information.

Unfortunately I have the most weird problem (in 8.04 server):

After creating the RAID arrays, rebooting successfully, and growing the RAID 1 arrays to include the second devices (sdb1, sdb2, etc.), the system cannot boot (the md devices are stopped during boot). It might have something to do with the order in which the modules are loaded (sda and sdb sit on different controllers with different drivers), or it might have something to do with a mix-up between md arrays and sd devices. For example, I have sda9 and sdb9, which belong to md6, but mdadm reports for the sda member:

mdadm -E /dev/sda9

/dev/sda9:

Magic : a92b4efc

Version : 00.90.00

UUID : 61b6692d:3900807a:5e76b0e2:85a6fbc1 (local to host TransNetStreamer)

Creation Time : Sat Jan 10 17:13:51 2009

Raid Level : raid1

Used Dev Size : 9735232 (9.28 GiB 9.97 GB)

Array Size : 9735232 (9.28 GiB 9.97 GB)

Raid Devices : 2

Total Devices : 1

Preferred Minor : 6

Update Time : Sun Jan 11 20:15:26 2009

State : clean

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Checksum : bb676a86 - correct

Events : 0.760

Number Major Minor RaidDevice State

this 0 8 9 0 active sync /dev/sda9

0 0 8 9 0 active sync /dev/sda9

1 1 0 0 1 faulty removed

while for the sdb member:

mdadm -E /dev/sdb9

/dev/sdb9:

Magic : a92b4efc

Version : 00.90.00

UUID : 61b6692d:3900807a:5e76b0e2:85a6fbc1 (local to host TransNetStreamer)

Creation Time : Sat Jan 10 17:13:51 2009

Raid Level : raid1

Used Dev Size : 9735232 (9.28 GiB 9.97 GB)

Array Size : 9735232 (9.28 GiB 9.97 GB)

Raid Devices : 2

Total Devices : 2

Preferred Minor : 6

Update Time : Sun Jan 11 15:22:22 2009

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Checksum : bb67209c - correct

Events : 0.66

Number Major Minor RaidDevice State

this 1 8 25 1 active sync /dev/sdb9

0 0 8 9 0 active sync /dev/sda9

1 1 8 25 1 active sync /dev/sdb9

Different things. HOW IS THAT possible?

Again thanks for your time

-

Hi,

Thanks for this document. I agree with Wes that it is best to format your disk right after step 2 if it already has data on it. I neglected to do that and on reboot it gave me this error:

The filesystem size (according to the superblock) is nnn blocks

The physical size of the device is yyy blocks

Either the superblock or the partition table is likely to be corrupt!

Abort?

Of course this makes perfect sense, as both the original partition and the RAID disk now had their own superblocks on there. Formatting the md(x) partitions and rerunning the rsync solved that.

-

What a great post! I’m actually familiar with all the pieces, but it’s terrific to have them put together in a road map.

Surprised the original CentOS poster did not point out that the Red Hat/CentOS/Fedora equivalent of update-initramfs would be mkinitrd.

-

Thanks for the very informative guide!

I recently had to move my root file system from one pair of Raid1 disks to another pair of Raid1 disks, and was totally nonplussed to find that although grub appeared to be working, I would get dumped into a BusyBox prompt by initramfs.

Although the BusyBox prompt didn’t say, I found I could run mdadm from there, and found that the UUID of the Raid1 filesystem was different from the new one I had prepared.

After a bit of googling, I found this page, which pointed me in the direction of a fix.

Using your chroot method and update-initramfs worked like a charm. Thanks, friend.

-

Rather than rsync, I recommend dump. It’ll properly transfer everything on your disk, like extended security attributes and hard-linked files, and automatically avoid anything weird. Just precede this with a mkfs, and you can be sure that everything is clean (dump|restore does not delete files, it only adds them):

cd /

dump -0 -f - / | (cd /mnt && restore -rf -)

-

A gem among the internet, sir. I am going to clone this on my wiki for personal storage (with a link to this site!). This saved me a lot of time.

The newer grub has a different file though. grub.cfg if I remember.

Still. Perfect.

-

Quote:

“Edit /boot/grub/menu.list and find the line like this:

# kopt=root=UUID=9e299378-de65-459e-b8b5-036637b7ba93 ro

Replace the UUID with the one you just found. Leave the line commented, and save the file. Now rebuild menu.lst to use the new UUID:”

You want to edit /boot/grub/grub.cfg and replace the many UUID entries there instead.

I may still keep the old instructions for the old grub though?

-

Great guide! Thanks.

One thing that happened to me was that I got an error message when copying .gvfs in my home directory. I included /home/*/.gvfs/ in the filter list and all went well (Ubuntu 10.04 LTS).

-

Excellent article, each point is explained nicely. I was able to mirror my RHEL 6.2 OS disks on the very first go. Many thanks for your efforts to write this article.

The only thing I did not understand is why the names of my RAID devices keep changing after reboots. Earlier they were:

/dev/md0 – /

/dev/md1 – /home

/dev/md2 – swap

After reboot, for some strange reason, they became:

/dev/md127 – /

/dev/md126 – /home

/dev/md125 – swap

The order (125, 126, 127) keeps changing after each reboot.

Any ideas to overcome this?

-

I did the same with no luck :(

-

Thanks for this article! I have been working on moving over to a raid1, and have just almost gotten it working. I’m so close I can taste it. The machine will now boot from the raid, but I am trapped at the login screen. After entering my password, I just get dumped back to the login screen again, whether I try to login as my admin or regular account. I can only login as a guest. The original HDD is not part of the array, so I can switch back to it easily. On the old setup it logs in automatically, so I don’t know why the new setup is not doing the same, since all the files/settings should have copied over to the new drives, yes? Any suggestions would be greatly appreciated. I’m running Ubuntu 12.04. Thanks.

-

Thanks, Tyler. Turns out it was a pretty easy fix… once I finally thought about it enough. I was moving from a 250GB HDD that had nothing except the OS on it onto two raid1-ed 2TB drives, on which is a 50GB /, 2GB swap, and remainder for /home. Because my old drive had no data or anything I used on it, I had not been running the rsync for the /home partition, because I had simply been thinking that would copy over data. Once I thought about it, I realized that not syncing the /home directory was preventing the log in from working, so I ran the rsync and now it works fine.

The only problem I’m having now is that it won’t automatically boot when the raid is degraded. I’ve tried a few things, and for some reason it goes to the purple screen, and if I just blindly type “y” or “reboot”, then it boots degraded and I can rebuild the array. I’ve seen some bug reports on that, and I can’t seem to get it to go away. But at least I know it will boot degraded; I just have to remember to type stuff at the purple screen, even though I can’t see it asking me if I want to boot degraded or see any screen activity when I type. While it’s annoying, I really don’t care because I know I can boot when/if one of the drives fails. So now I am good enough to start copying over my photo collection and other files, set up my backups, and enjoy the security of the raid system.

Again, thanks for the writeup, once I actually followed the whole set of instructions, it worked great!


Trackback link: https://www.tolaris.com/2008/10/01/moving-your-linux-root-partition-to-raid/trackback/